I’ve sat through a lot of sprint retrospectives.

If I’m honest, most of them felt like a well-rehearsed play.

A few thank-yous. A soft frustration. Maybe an idea or two for “what we’ll try next.”

We’d wrap up, feel vaguely accomplished, and go right back to working exactly the same way.

It was polite, even therapeutic at times. But deeply ineffective.

The stories changed every sprint. The patterns didn’t.

Same delivery friction. Same dependency chaos. Same features built without a clear understanding of what they were meant to solve.

And still: the same retrospective (retro) format.

Over time, I stopped trusting retros. Not because the intent was wrong. But because most formats weren’t built to surface what teams actually need to see:

Not what happened. What keeps happening.

The agile ritual I trusted least

Retros became the agile ceremony I had the least faith in.

Not because reflection doesn’t matter, but because most retro formats prioritize performance over pattern.

We’re encouraged to balance positives and negatives. To stay solutions-oriented. To make sure everyone speaks. And to always leave with a few action items, no matter how superficial.

That might sound like a healthy practice. But in reality, it creates an environment where difficult truths get softened, if not erased.

Most retros don’t invite deep insight. They reward safe participation.

They produce noise — stickies, votes, tasks — but rarely signal.

And the deeper issues? What doesn’t get named doesn’t get fixed. So the cycle continues: same issues, different sprint.

I wasn’t chasing a better ritual

That’s what most retro formats are: a ritual.

I wanted a better way for us to see where we kept getting stuck.

The idea started years ago, in the middle of yet another post-retro rant. Someone stopped me and asked, “Okay, but what would you do instead?”

I had nothing. I think I said something hand-wavey about “looking for meta-patterns, not events.” It sounded smart. It meant nothing.

It took a few more years — of watching the same dysfunctions play out, of testing out alternate framings with teams, of living the same broken loops — to actually build the thing I wish I’d had then.

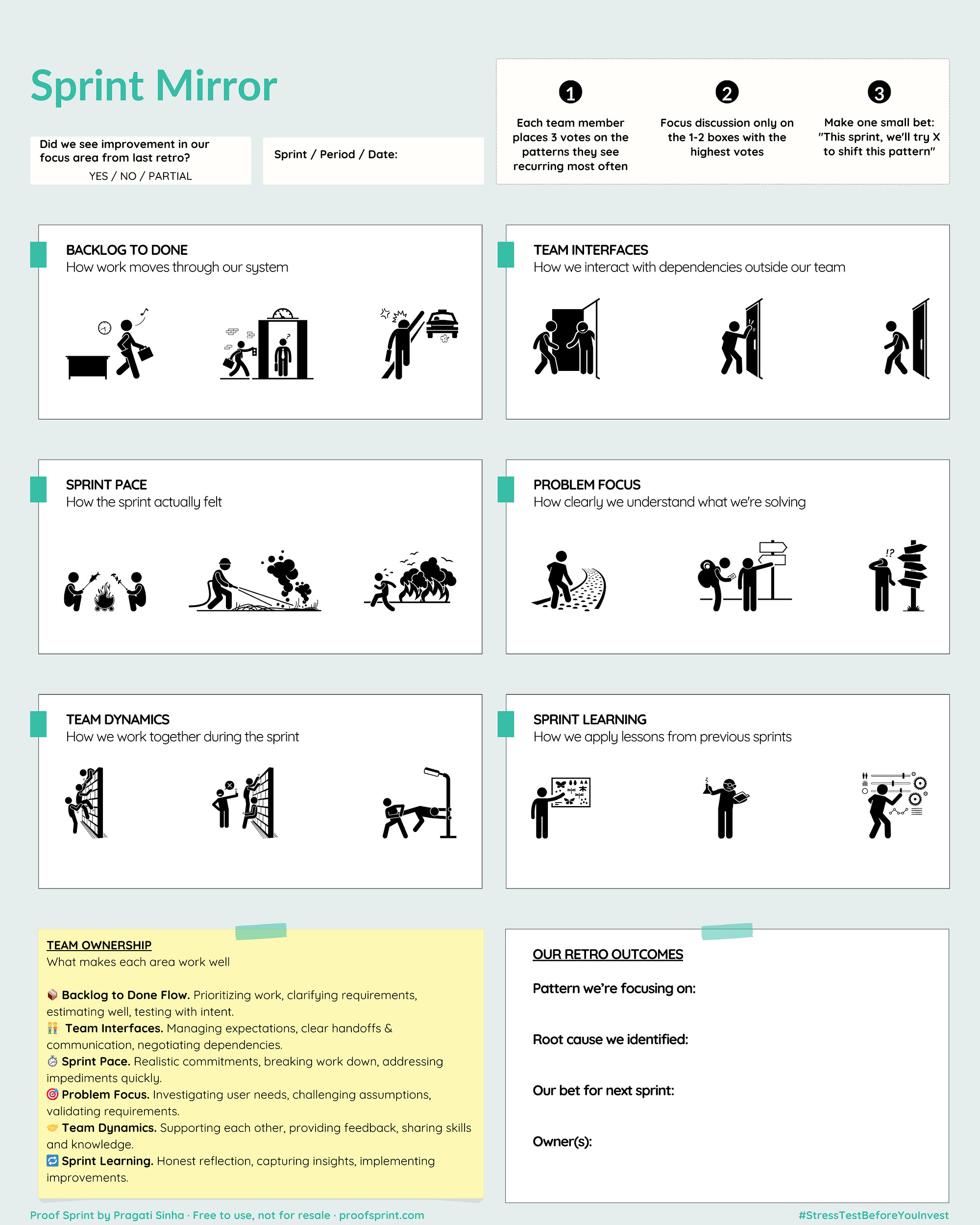

This is my answer: the Sprint Mirror.

What it does differently

This is not a retro board. It’s not a template. It’s a pattern recognizer.

It’s designed to help teams see what hasn’t changed, no matter how many sprints they’ve completed.

Because sprint dysfunction rarely shows up as one-off events. It lives in the shape of how we work. The invisible structures, habits, and handoffs we’ve learned to accept.

This tool helps you name those patterns. So you can stop treating symptoms, and start shifting the system.

Why these six boxes?

I tried other formats like 5-box, 7-box, and quadrant layouts. Different questions and different prompts. But this 6-box version held up best under pressure.

Each box reflects a distinct layer of team reality. Together, they give a full picture of sprint experience: technically, relationally, and systemically.

Let me walk you through why each one matters.

1. Backlog to Done Flow

How work moves through your system

This is the logistics box. It tracks a story’s journey from prioritization to done. The question isn’t how fast it moved, but how cleanly:

Did we write clear requirements?

Estimate on time?

Test with the right scope?

Or did we push barely-ready stories through the system and call it delivery?

This box helps you look upstream. When stories are carried over, tested late, or expand mid-sprint, the problem usually isn’t capacity; it’s clarity.

This is where process friction shows up. Not as a blocker, but as an undercurrent. And if you don’t make space for it, the system keeps breaking quietly.

2. Team Interfaces

How we interact with people outside our team

This might be the most important box in the set.

I didn’t want a generic “communication” bucket. I wanted to name a specific reality of modern work: teams don’t build in isolation.

This box holds the pain of external dependencies. Waiting for input. Blocked on a handoff. Misaligned timelines.

It separates internal tension (how we function) from external friction (what we rely on but can’t control). That’s important because the strategies needed to shift each are different.

It also creates a cleaner feedback loop to leadership. Patterns in this box aren’t individual problems. They’re signals of organizational dysfunction.

3. Sprint Pace

How the sprint actually felt

This box tracks rhythm. Did we work with flow or in frantic bursts?

Steady pace? Or last-minute scramble? Work completed early? Or piled up on the last day?

This is where planning habits, realistic commitment, and facilitation style quietly show up.

Teams that normalize chaos often think it’s just “how we work under pressure.” But pressure isn’t always productive. And if the system requires constant adrenaline to hit its goals, it’s not a system.

This box helps teams notice that, and talk about it.

4. Problem Focus

How clearly we understand what we’re solving

This one’s subtle, but powerful.

Most retros focus on delivery mechanics. This one zooms out. It asks:

Did we solve a real problem, or just deliver a ticket?

Were we building for a user need, or chasing an internal deadline?

Did we question assumptions?

Or execute whatever was in the backlog without pause?

A team can have flawless execution and still ship something meaningless.

This box surfaces that tension before it becomes baked into the roadmap.

5. Team Dynamics

How we worked together during the sprint

This isn’t a “did we all get along?” check. It’s about how the team functioned together:

Were voices heard?

Were challenges welcomed?

Did we have each other’s backs?

It’s not a psychological safety score. It’s a lived signal.

When this box lights up, it often means someone was stretched too thin. Or sidelined. Or silently resentful. Those stories rarely make it into Jira. But they shape everything.

This box helps make them visible.

6. Sprint Learning

How we apply lessons from previous sprints

Every team claims they’re doing “continuous improvement.” This box tells you if that’s actually true.

Did we act on anything we learned last sprint? Or did we nod through the retro and forget it all by planning?

This is the team’s memory system. The loop that turns reflection into action.

Without it, each retro is an isolated event. With it, you get a traceable arc.

How teams use it

Each box includes three visual metaphors — simple, intuitive icons that represent common sprint patterns.

Every team member gets three votes. That number is deliberate.

Here’s why:

It forces prioritization. Three votes are enough to surface patterns, but not so many that everything gets flagged. With six boxes, people have to be selective.

It creates signal, not noise. On a team of 5–9 people, three votes each produces 15–27 total data points, enough to reveal clear clusters and make ties less likely.

It levels the playing field. Everyone gets the same number of votes. It prevents one person from dominating the direction of the retro.

It encourages deeper thought. Studies in decision-making show that asking people for their “top 3” creates more considered, thoughtful choices than asking for just one — or allowing unlimited input.
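The arithmetic behind those vote totals is easy to check. Here is a minimal Python sketch; the names `VOTES_PER_PERSON`, `BOXES`, and `total_votes` are mine, made up for illustration, and are not part of the Sprint Mirror itself.

```python
# Hypothetical constants, taken from the numbers in the text above.
VOTES_PER_PERSON = 3
BOXES = 6


def total_votes(team_size: int, votes_per_person: int = VOTES_PER_PERSON) -> int:
    """Total votes cast when every team member uses all of their votes."""
    return team_size * votes_per_person


# Team sizes of 5 through 9 people, as in the text.
vote_totals = {size: total_votes(size) for size in range(5, 10)}

for size, total in vote_totals.items():
    even_share = total / BOXES  # votes per box if spread perfectly evenly
    print(f"{size} people -> {total} votes, {even_share:.1f} per box if spread evenly")
```

Spread perfectly evenly, that works out to between 2.5 and 4.5 votes per box, so a box that collects noticeably more than its even share is a cluster worth talking about.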

After voting, the team steps back and asks:

Where are the votes clustering?

Where are we polarized?

What pattern is still pulling us off course?

Then: pick one box. One pattern. One bet.

What will we try to shift next sprint?

No five-point action list. Just focused intent.
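If your team votes in a spreadsheet or a chat thread rather than on a board, the clustering question can be answered with a simple tally. The sketch below is one hypothetical way to do that in Python. The `tally` and `top_boxes` helpers, the member labels, and the example votes are all invented for illustration, and it assumes each vote is recorded as a (member, box) pair. It only covers "where are the votes clustering?"; polarization still needs a conversation.

```python
from collections import Counter

# The six boxes, named as in this article.
BOXES = [
    "Backlog to Done Flow",
    "Team Interfaces",
    "Sprint Pace",
    "Problem Focus",
    "Team Dynamics",
    "Sprint Learning",
]


def tally(votes: list[tuple[str, str]]) -> Counter:
    """Count votes per box; `votes` holds (member, box) pairs."""
    counts = Counter({box: 0 for box in BOXES})
    for _member, box in votes:
        if box not in counts:
            raise ValueError(f"unknown box: {box}")
        counts[box] += 1
    return counts


def top_boxes(counts: Counter) -> list[str]:
    """Return the box with the most votes, or every tied box if there is a tie."""
    highest = max(counts.values())
    return [box for box in BOXES if counts[box] == highest]


# Invented example: five people (A to E), three votes each.
example_votes = [
    ("A", "Team Interfaces"), ("A", "Sprint Pace"), ("A", "Backlog to Done Flow"),
    ("B", "Team Interfaces"), ("B", "Problem Focus"), ("B", "Sprint Pace"),
    ("C", "Team Interfaces"), ("C", "Backlog to Done Flow"), ("C", "Sprint Learning"),
    ("D", "Sprint Pace"), ("D", "Team Interfaces"), ("D", "Team Dynamics"),
    ("E", "Team Interfaces"), ("E", "Problem Focus"), ("E", "Backlog to Done Flow"),
]

counts = tally(example_votes)
candidates = top_boxes(counts)
print(counts.most_common())
print("Candidate box for the one bet:", candidates)
```

If two boxes end up level, `top_boxes` returns both instead of choosing silently. Picking the one bet stays a team decision.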

Building a pattern library

The true power of the Sprint Mirror emerges after you’ve used it a few times.

When you run this exercise monthly (or as frequently as your team decides), something remarkable happens: you start building a visual record of recurring patterns. Like a time-lapse photograph of your team’s challenges.

After 3–4 sessions, take time to look back at your previous Mirror outcomes. Ask:

Which boxes consistently receive the most votes?

Have our bets actually shifted any patterns?

Are we seeing the same issues in different disguises?

This review creates a “pattern of patterns”: a meta-level insight that’s impossible to see in any single retro.
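For teams that keep their vote counts somewhere digital, the first review question ("which boxes consistently receive the most votes?") can be sketched in a few lines of Python. This is an illustration under my own assumptions, not a feature of the tool: the `recurring_boxes` helper, the `min_sessions` threshold, and the session data are all hypothetical, and each session is assumed to be stored as a mapping of box name to vote count.

```python
from collections import Counter


def recurring_boxes(sessions: list[dict[str, int]], min_sessions: int = 2) -> list[str]:
    """Boxes that were top-voted (ties included) in at least `min_sessions` sessions."""
    times_on_top = Counter()
    for counts in sessions:
        highest = max(counts.values(), default=0)
        if highest == 0:
            continue  # skip sessions with no recorded votes
        times_on_top.update(box for box, n in counts.items() if n == highest)
    return [box for box, times in times_on_top.most_common() if times >= min_sessions]


# Invented data: three Mirror sessions for a five-person team (15 votes each).
sessions = [
    {"Team Interfaces": 5, "Sprint Pace": 3, "Backlog to Done Flow": 3,
     "Problem Focus": 2, "Team Dynamics": 1, "Sprint Learning": 1},
    {"Team Interfaces": 4, "Sprint Pace": 4, "Problem Focus": 3,
     "Backlog to Done Flow": 2, "Team Dynamics": 1, "Sprint Learning": 1},
    {"Sprint Pace": 5, "Team Interfaces": 3, "Problem Focus": 3,
     "Backlog to Done Flow": 2, "Sprint Learning": 2, "Team Dynamics": 0},
]

recurring = recurring_boxes(sessions)
print("Boxes that keep coming out on top:", recurring)
```

A count like this only tells you where to look. Whether a bet actually shifted a pattern, or whether the same issue has come back in a different disguise, is still something the team has to judge together.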

Some teams find that monthly cadence works best for pattern recognition, giving enough data points without retro fatigue. Others prefer running it every sprint to maintain momentum. There’s no perfect frequency. The right cadence is the one that gives your team enough space to implement changes while still keeping patterns visible.

What matters most isn’t how often you use it, but that you’re consistent. Each Mirror session builds your team’s pattern recognition muscles. And over time, those muscles get strong enough to catch problems before they become embedded habits.

Embracing the negative

Some of you might wonder: “Isn’t focusing on problems negative? Shouldn’t we celebrate what’s going well too?”

Fair question. But here’s what I’ve learned:

Most retros already over-index on positivity. We’re conditioned to balance every critique with praise, often diluting the hard truths that need addressing. The Sprint Mirror isn’t about ignoring wins; it’s about finally giving proper attention to the patterns that keep holding us back.

The tool doesn’t create negativity; it simply makes existing friction visible. And in my experience, teams find it deeply relieving to finally name what everyone’s been feeling but not saying.

The most positive thing we can do isn’t pretending everything’s fine. It’s creating a space where real patterns can be seen, named, and changed together.

Why I designed it this way

I could have added a lot more to this tool.

Role-based prompts

Metrics dashboards

Action item tracking

Retro scoring

I chose not to. Because every extra feature adds weight, and this tool is meant to stay light.

Simple, by design.

No process overhead.

No “agile theater.”

Just a tool that teams can run, repeat, and learn from (every sprint if they want to).

This is the retro I needed, but never had

I built the Sprint Mirror because I got tired of pretending that polite reflection was the same as progress.

Most teams don’t need another improvement backlog. They need a pattern detector.

They need a moment — not to talk about everything that happened — but to name what’s still happening.

The same blockers.

The same bottlenecks.

The same team pain that keeps getting papered over.

This tool doesn’t give answers. It gives shape. And sometimes, that’s all a team needs to find the courage to change what’s no longer working.

If it resonates, try it.

You don’t need a facilitator.

You don’t need training.

You just need the team and the willingness to look at what hasn’t changed.

The rest? Happens sprint by sprint.

Until next time,

Pragati
